Tag Archives: Katonah Homes for Sale

Facebook Graph Search: Why This Could Be So Important for the Future | Katonah Realtor

Last week Facebook launched Graph Search. This is an attempt to turn Facebook into Google – i.e. make it a place where people go to ask questions, but with the supposedly added bonus that the information you receive is endorsed by people you know rather than people you don’t.

This is a very important step, not just for Facebook, because it could come to be understood as one of the critical opening skirmishes in the Battle of Big Data. How it plays out could have enormous implications for the commercial future of many social media properties, including Google.

This is how the Battle of Big Data squares up. On the one hand you have platforms, such as Google and Facebook, amassing huge behavioural data sets based on information that users give out through their usage of these infrastructures. Googlebook then sells access to this data gold mine to whoever wants it. On the other hand you have the platform users, who, up until this point, have been relatively happy to hand over their gold. The reason for this is that these users see this information as being largely inconsequential, and have no real understanding of its considerable value or the significant consequences of letting an algorithm know what you had for lunch. The fisticuffs begin when these users start to understand these consequences – because in most instances, their reaction is to say “stop – give me back control over my data.”

There is an enormous amount riding on this. If users start to make demands to repatriate, or have greater control over, their data, this delivers hammer blows to the commercial viability of Googlebook-type businesses, which are either making huge amounts of money from their existing data gold mine, or have valuations that are based on the future prospect of creating such gold mines. It also starts to open up the field for new platforms that make data privacy and control a fundamental part of their proposition.

Initial reports from the field are not encouraging (for Facebook). Privacy concerns were raised immediately, which Facebook had to allay (see this Mashable piece), and there was significant negative comment from the user community, as reported in this Marketing Week article. See also this further analysis from Gary Marshall at TechRadar. It will be very interesting to see how this plays out.

From another perspective, I think this announcement illustrates what Facebook believes is its advantage over Google – i.e. its sociability and the fact that it can deliver information that is endorsed by people that you know. The interesting thing about this is that the power of social media lies in its ability to create the processes that allow you to trust strangers. The value of the information can therefore be based on the relevance or expertise of the source – not the fact that they are a friend. Google is the master of this in a largely unstructured way, and services such as Amazon or even TripAdvisor can deliver this via a more structured process. Facebook can’t really do this, because it neither has Google-level access to enough broad-spectrum data, nor does it have processes relevant to specific tasks (TripAdvisor for travel – Amazon for product purchase).

$7.66T Of Fiscal And Monetary Stimulus | Katonah NY Real Estate

My 10-in-1 Content Creation Strategy | Katonah Real Estate

Content creation calendars and schedules are the bane of most serious bloggers’ and content managers’ lives, depending on which side of the creative block you’re on.

I straddled this fine line on many occasions until my Eureka moment. Having amalgamated my home radio and video studios I realised that I could double up on content creation with my business-consultant partner, a content reservoir of genius proportions.

Soon we had discovered a 3-in-1, then a 6-in-1, and finally a 10-in-1 content creation strategy.

When I say “radio and video studio” (actually my third bedroom), be assured it’s not exactly state of the art, although I have slowly acquired suitable equipment and created a workable dual studio.

In saying this, anyone with a computer, some sort of USB audio interface/mixer, a reasonable microphone and digital video camera or DSLR can achieve the same results. In this article I assume you are familiar with your gear so I’m not going to go into any detail on how to use each piece of equipment in the process.

Time costs!

One of the most valuable and rarest assets of a successful business consultant is time. Making the most of my partner’s genius when time is in such short supply used to be a hectic operation that usually resulted in a minimal flow of great content. This is where my Eureka moment has paid incredible dividends and saved many hours, because we now generate multiple pieces of content at once.

Because we use a joint audio and video, green-screen studio, when we sit down and record a session, we create both an audio and a video recording simultaneously. The following ten points outline the quality content that we create from each five-minute recording session.

We now have this down to such a fine art that we can do six five-minute recordings in 40 minutes. For me as the content creator, this is heaven, as it enables me to work in my genius. (A little side note here: your genius is simply working in your passion and talent, and I believe you need to be doing this for 80% of your working time.)

How it works

So, it all starts with one content creation session—just one!—where we have learned to maximise both time and genius. Of course there is preparation required to make the session go smoothly, and a good knowledge of your field of expertise is essential, but once we’re in studio, this is how the magic happens.

- The primary piece of content is a video for uploading to our YouTube channel and if we choose, we upload it to iTunes as a video podcast. We also embed the same video on our blog at MurrayKilgour.com. A well-prepared, quality video is the basis for this whole process. We use either a script or a series of bullet points to make the recording. I personally enjoy using a script with a DIY teleprompter, because of my radio background. Cheesycam.com is an awesome resource for DIY ideas and equipment—a lot of it DIY or reasonably priced new gear.

- The recorded audio track becomes a podcast which is embedded on our blog, and most often is uploaded to our Living on Purpose iTunes podcast channel as well. There are many other podcast sites to upload to, but we choose just iTunes. If you are unable to create video, the podcast can become the audio for a Slideshare video presentation, so give the audio the same good preparation as you would a video.

- We send the mp3 audio recording to a transcription contractor hired through eLance.com for transcription at $2 per recording. From this transcript, we create a blog post for our site, a guest post for another website, or an article for a site like ezinearticles.com. This invaluable piece of text is also used as a caption or transcript with our videos on YouTube for SEO purposes. Because it’s accurate, we gain the additional traction of having hearing-impaired people able to enjoy our videos using the YouTube subtitles feature.

- The video we have created, if it’s not placed on our YouTube channel, can now form part of a multi-part video ecourse. We use an Aweber autoresponder to give this away for free and gain subscribers to our blog, but it can be monetized in the form of a paid video ecourse. You can determine the value or purpose of the content here.

- We again take the transcribed text and repurpose it into a ten-part ecourse delivered in the same way as the video ecourse: as a bonus for signing up as a subscriber to the blog. This method has been extremely successful—we’ve signed up thousands of subscribers to our blog this way.

- The transcribed text now adds real value when it is compiled into a section or chapter of an ebook, to be given away as a free download or sold on the blog. This is where the value of the method comes in, because many bloggers battle to get into writing an ebook. We edited, added and modified a lot of the text to create an ebook, but what this method did was give us a great quantity of raw material to work with. We had created more than 140 podcasts by the time we woke to the fact that we could compile a quality ebook using that material.

- I am in the fortunate position of being a breakfast show producer for a local radio station, so the podcast becomes a regular slot on our community radio station, Radio CCFm, which has 250 000 listeners. But before you say this is a privileged position, I can assure you as a producer that most local radio stations are always looking for quality content, especially if it is free. So short podcasts with a good intro and outro may become a regular feature on radio stations and give good traffic to a website.

- With the advent of HD video DSLRs it is possible to produce high-quality video footage for TV programs. We repurpose our five-minute content creation session again in the form of a short TV program for a local community TV station, Cape Town Television. If it’s quality content and free, TV stations will take your show—especially if it’s relevant to their viewers.

- When we repurpose the transcribed text into an ebook, the audio becomes part of an audio book. You might say that this is pushing it, but I use the audio as a companion free audio book to the photography ebook I sell on my website. It’s a bonus for the buyer and for me, because I didn’t have to do anything extra to create it.

- Finally, blog posts, audio, and video make an amazing weekly or monthly newsletter. I do this using Aweber templates, which are free with the subscription. We try to do it on a two-weekly basis, as we don’t always have enough content for weekly mailings. It works perfectly for a monthly newsletter and I would advise this when you’re starting out. The amount of content you generate will determine the frequency.

Ten points sounds good, and I thought that adding an eleventh point might be a bit much, but here’s a bonus idea. What we’ve done is create a boxed DVD set of training modules for offline and online sales. Not all people are excited about online, and some like a physical product in their hands. In our business we use all of the above content in its different forms as part of a DVD boxed series for sale to our coaching clients. They love it and we love it, especially the time it takes to create!

Unlimited content

There are no limits to how you can use your content if you begin with the end in mind, but the emphasis must be on quality content. When you sit down in front of the camera and microphone, think “end product,” and design your process to get the most out of the content creation session. I’m sure that most people can easily create seven of these ten pieces of content out of just one five-, ten-, or even 30-minute recording session.

So, think big in your content creation, begin with the end in mind, and maximize your time and effort to produce content that will attract the best traffic and convert those people into buyers. Your success will result from the quality of content you produce. So give it your best!

Florida foreclosures hit highest level in 2012, defy housing rebound | Katonah NY Real Estate

Guide to Bringing Your WordPress Blog Up to Today’s Google Algorithm Standards | Katonah Realtor

Keeping up with Google’s ever-changing algorithm updates on WordPress blogs requires constant monitoring and adjustments, which many webmasters fail to keep up with. Following the Penguin algorithm update, many bloggers experienced a halt or decrease in traffic.

Although keeping up with industry trends does require frequent updates to continue performing well in search, most tasks are not time consuming at all. This guide will help you understand recent changes to Google’s algorithm and how to update your WordPress blog to follow the new industry standards.

The Penguin and Panda Revolution

The Penguin and Panda algorithm updates targeted low-quality websites full of duplicate content, spammy linking, and poor user experience. WordPress is a wonderful content management system and blogging platform out-of-the-box, but it does create massive amounts of duplicate content.

The days of large-scale link exchanges and linking to unrelated, low-quality websites were abruptly ended by the Penguin update. In previous years, many bloggers would create low-quality sites with poor internal linking structure, few quality pages and banner ads taking up the majority of each page, a practice that was halted by Panda. Many bloggers, especially personal bloggers, were left wondering what to do and how to monetize their blogs.

Link Building for Blogs in the Post-Penguin World

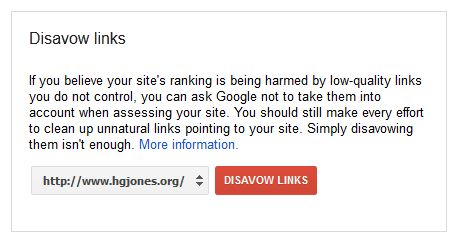

Sure, the days of quick and easy links are over, but this doesn’t mean there is any less value to link building. In fact, high-quality, relevant links are valued more than ever. Before building new links, it is imperative to go back and fix your current link profile. Start out by pulling a backlink report of your website. Sift through each of the results, identifying any low-quality or irrelevant links. Contact each webmaster to remove the links. If you cannot find contact information or the webmaster refuses to remove the links, consider using Google’s new disavow links tool.
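The last step above can be sketched in code. This is a minimal Python sketch, assuming you have already pulled your backlink report and flagged the bad links by hand; the function name and the sample URLs are hypothetical. It builds a plain-text file in the format the disavow tool accepts: one URL or one `domain:` entry per line, with `#` marking comments.

```python
from urllib.parse import urlparse


def build_disavow_file(bad_urls, whole_domains=()):
    """Return disavow-file text for the given links.

    bad_urls      -- individual page URLs to disavow
    whole_domains -- domains to disavow entirely (written as "domain:" lines)
    """
    domains = sorted({d.lower() for d in whole_domains})
    lines = ["# Links we asked to have removed without success"]
    lines += [f"domain:{d}" for d in domains]
    # Skip single URLs whose host is already covered by a whole-domain entry.
    for url in sorted(set(bad_urls)):
        host = (urlparse(url).hostname or "").lower()
        if host not in domains:
            lines.append(url)
    return "\n".join(lines) + "\n"


# Hypothetical flagged links from a backlink report.
text = build_disavow_file(
    bad_urls=[
        "http://spammy-directory.example/links.html",
        "http://link-farm.example/page1.html",
    ],
    whole_domains=["link-farm.example"],
)
print(text)
```

The sketch only matches exact host names, so a link on a subdomain of a disavowed domain would still be listed on its own line. Review the file by hand before uploading it.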

Now that you have removed all low-quality links pointing to your website, take a look at who you are linking to. Many personal bloggers sell text link ads and sponsored posts for extra income. Those days are numbered. Google looks not only at the links pointing to your site, but also at the sites you link out to.

Unless the advertiser explicitly states otherwise, I would highly recommend adding the NoFollow attribute to any paid links on your website. Let’s say you own a travel blog, and a travel company purchased a sponsored post from you with the anchor text “cheap holiday travel packages.” You would change the markup for the link to look like this:

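`<a href="http://advertiser.example/" rel="nofollow">cheap holiday travel packages</a>`

Here the href is a placeholder for the advertiser’s URL. The rel="nofollow" attribute will remove any value passed on from your website to the advertiser, and will help your website conform to Google’s webmaster quality guidelines for link schemes.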
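On a blog with many sponsored posts, editing each link by hand gets tedious. The following is a rough Python sketch of how the change could be automated, assuming you keep a list of advertiser host names; the function name, the host `travel-deals.example`, and the sample HTML are all hypothetical. It uses regular expressions, which is good enough for simple post content but is not a full HTML parser.

```python
import re
from urllib.parse import urlparse

A_TAG = re.compile(r"<a\b([^>]*)>", re.IGNORECASE)
HREF = re.compile(r"""(?<![\w-])href\s*=\s*["']([^"']+)["']""", re.IGNORECASE)
REL = re.compile(r"""(?<![\w-])rel\s*=\s*["']([^"']*)["']""", re.IGNORECASE)


def nofollow_paid_links(html, paid_hosts):
    """Add rel="nofollow" to every <a> tag whose href points at a paid host."""

    def fix(match):
        attrs = match.group(1)
        href = HREF.search(attrs)
        if not href or urlparse(href.group(1)).hostname not in paid_hosts:
            return match.group(0)  # not a paid link: leave untouched
        rel = REL.search(attrs)
        if rel is None:
            return f'<a{attrs} rel="nofollow">'
        values = rel.group(1).split()
        if "nofollow" in (v.lower() for v in values):
            return match.group(0)  # already nofollowed
        joined = " ".join(values + ["nofollow"])
        return "<a" + attrs[:rel.start()] + f'rel="{joined}"' + attrs[rel.end():] + ">"

    return A_TAG.sub(fix, html)


# Hypothetical sponsored post content.
post = (
    '<p>Book <a href="http://travel-deals.example/packages">cheap holiday '
    'travel packages</a> or read <a href="/about/">about us</a>.</p>'
)
result = nofollow_paid_links(post, {"travel-deals.example"})
print(result)
```

Only the link to the advertiser gains the attribute; the internal link is left as it was, so your own pages keep passing value to each other.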

Fear not, your blog can still be monetized. Consider signing up for Google’s display advertising network: AdSense. Offering display advertising options is the safest way to continue monetizing blogs. After all, Google wouldn’t offer it if it would hurt websites. Be careful not to overdo it with the display ads.

Websites where ads take up half or more of the page risk being penalized, so placement and ad quality will be key to running effective ads that make you money. Consider looking into conversion optimization and A/B testing to find which ads perform best in different spots on your website, and be sure to filter the types of ads that can be displayed so they are relevant to your audience. YouTube videos can also be monetized, so consider starting a YouTube channel and incorporating video into your blog.

Although most link building practices have been blacklisted by Google, there are still many ways to build links to blogs. Instead of exchanging links on lengthy links pages, consider exchanging guest posts with highly relevant blogs. Write high-quality, enticing content that other websites will want to reference with links. Sign up for Google Plus and set up Authorship.

Look for websites containing recommended blog lists relevant to yours, and inquire about getting listed. Review products within your industry, and use social media to let those companies know you reviewed their products. They may include your review in their list of testimonials. Attend or exhibit at industry events which list attendees on the event’s website with links. Become a thought leader in your niche by creating and promoting linkable assets like white papers, guides, videos, ebooks, and best practices.

Setting up Your Blog for SEO

Optimizing WordPress blogs requires a few plugins and configurations to meet today’s industry standards. Start off by downloading and installing a plugin called WordPress SEO. This is the best SEO plugin available for WordPress, and it has an import feature to import any settings from other SEO plugins.

Download and activate another plugin called W3 Total Cache. This is the best caching plugin available, and works in unison with WordPress SEO. W3 Total Cache will help decrease page loading times by creating a cache of each page. Any time you mark a comment as spam, save a draft of a post, or click off the page editor, WP creates a duplicate copy of those pages.

Using the WP-Optimize plugin will purge all those unnecessary copies, which will save server space and speed up your website. WP-PageNavi is another must-have plugin for optimizing your pagination by adding the rel=prev and rel=next attributes. This will help your website be indexed more efficiently and increase crawl depth.
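To make the rel=prev and rel=next idea concrete, here is a small Python sketch of the `<link>` tags that belong in the head of each page of a paginated archive. It illustrates the markup only and is not the plugin’s own code; the function name is hypothetical, and the `/page/N/` URL pattern is an assumption based on WordPress’s usual pretty-permalink pagination.

```python
def pagination_links(base_url, page, last_page):
    """Return the <link> tags for one page of a paginated archive."""

    def url_for(n):
        # Page 1 lives at the archive root; later pages at /page/N/.
        return base_url if n == 1 else f"{base_url}page/{n}/"

    tags = []
    if page > 1:
        tags.append(f'<link rel="prev" href="{url_for(page - 1)}">')
    if page < last_page:
        tags.append(f'<link rel="next" href="{url_for(page + 1)}">')
    return tags


# Hypothetical archive with five pages; show the tags for page 2.
links = pagination_links("http://blog.example/category/travel/", 2, 5)
for tag in links:
    print(tag)
```

The first page gets only a next link and the last page only a prev link, which tells a crawler where the series starts and ends.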

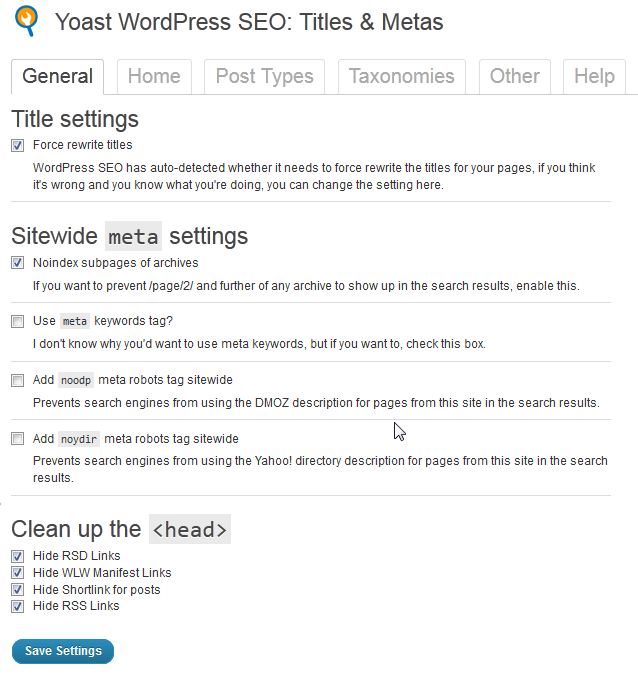

Once all those plugins are installed and activated, you will need to configure them. Use the WordPress SEO plugin to set up Google and Bing Webmaster Tools from the “Dashboard” menu on the plugin settings. Next, go to the Titles & Metas menu. On the General tab, check the box to force rewrite of titles if your custom title tags are not showing up. Check the box to Noindex subpages of archives. Check all four boxes to clean up the head by hiding RSD links, WLW manifest links, shortlinks, and RSS links.

On the Home tab, write a custom title tag and meta description for your homepage. You will also want to go through all pages and posts on your site and write custom titles and descriptions for each using the post editor. Enter in the URL of your blog’s G+ brand page in the Google Publisher Page field. This will set up the rel=publisher attribute for your homepage and connect your blog to your G+ page.

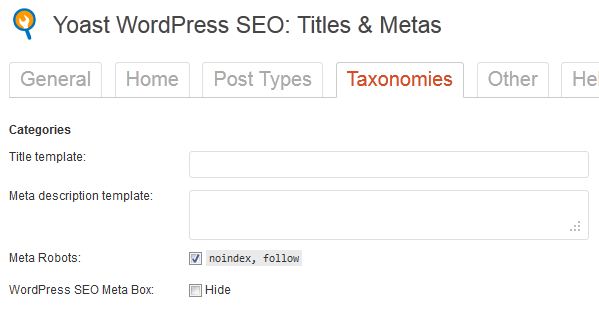

Once all those options are set up, go to the Taxonomies tab. WordPress creates massive amounts of duplicate content through taxonomies. Every time a category, tag, date, or author archive page is indexed, it creates a duplicate copy of each of those posts.

Check the boxes to set categories, tags, and format to “noindex, follow.” Go to the Other tab and set the author archives and date archives to “noindex, follow” as well. Don’t worry about crawl depth from doing this. As long as you have optimized pagination set up through the WP-PageNavi plugin, your website will still be crawled effectively. I recently ran an experiment on noindexing taxonomies in WordPress to test this theory out.
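After changing these settings, it is worth confirming that archive pages really carry the robots meta tag and that posts do not. The following Python sketch, which uses only the standard library, extracts the robots directives from a page’s HTML. The class and function names and the two sample pages are hypothetical; in practice you would feed in the HTML of your own tag or category pages.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect the directives of every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def robots_directives(html):
    """Return the set of robots directives found in the given HTML."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    return finder.directives


# Hypothetical page sources: a tag archive and a single post.
tag_archive = '<html><head><meta name="robots" content="noindex, follow"></head><body></body></html>'
single_post = "<html><head><title>Post</title></head><body></body></html>"

print(robots_directives(tag_archive))
print(robots_directives(single_post))
```

A tag archive should report both noindex and follow, while a normal post should report nothing, meaning it stays indexable.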

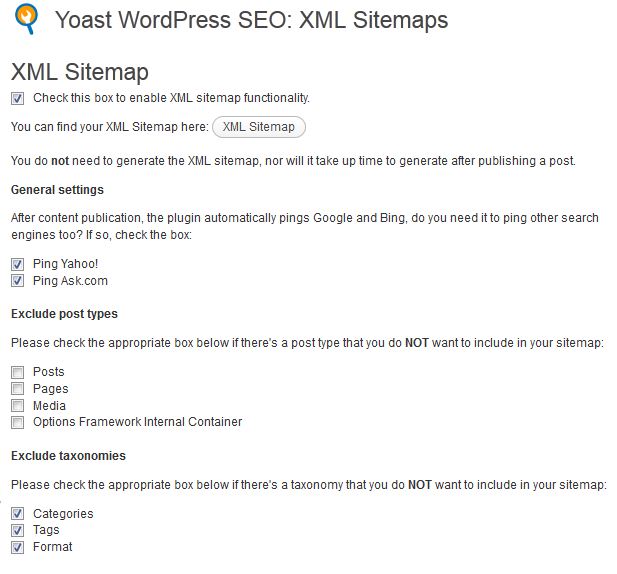

Next, go to the XML Sitemaps menu in the WordPress SEO plugin. Check the box to enable XML sitemap functionality, and submit your sitemaps in Google and Bing Webmaster tools. Check the boxes to ping Yahoo! and Ask.com. Check the box to exclude taxonomies for categories, tags, and format.
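For readers curious about what the plugin produces, an XML sitemap is a short, simple file. The Python sketch below builds a minimal one following the sitemaps.org protocol. It illustrates the file format and is not the plugin’s implementation; the function name and the sample URLs are hypothetical. In keeping with the advice above, only posts and pages are listed, not category or tag archives.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (url, last_modified) pairs, dates as YYYY-MM-DD."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical posts and pages; taxonomy archives are deliberately left out.
sitemap_xml = build_sitemap([
    ("http://blog.example/", "2013-01-20"),
    ("http://blog.example/cheap-holiday-tips/", "2013-01-18"),
])
print(sitemap_xml)
```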

Now go to the Permalinks menu and check the boxes to strip category bases and remove ?replytocom variables. You will also want to go to the WordPress permalink settings and strip categories and datestamps out as well. On the Internal Links menu, check to enable breadcrumbs and implement breadcrumbs on your posts. A snippet of code will be provided in the plugin.

Setting up G+ Authorship

Setting up Google Plus authorship is very quick and easy on WordPress sites. Download and install a plugin called Google Author Link. Under the settings menu for the Google Author Link plugin, select an author for the homepage of your website. Now go to the Users menu in the WP dashboard and edit each user’s profile. Add in the URL to each author’s G+ profile. Next, ask each author to add a URL to your website on the “Contributor To” section of their G+ profile. Authorship is now set up on your blog.

Setting up Microdata

Microdata is still a relatively new concept, first introduced with HTML5. Schema.org is a joint project by Google, Yahoo and Bing to help webmasters mark up their websites so search engines can better understand their content. Bing and Yahoo give a boost in rankings just for setting it up while Google changes the appearance of your listings in search engine result pages to increase clickability.

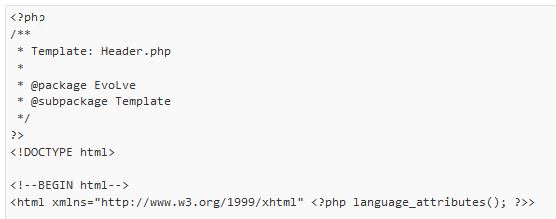

Before setting up microdata, you will need to change the doctype of your website to the HTML5 format. To do this, go to Appearance > Editor. Select the header.php file. At the very top of the header.php file, you will see a doctype. If it is not already in HTML5 format, change the doctype to `<!DOCTYPE html>`. After updating the file, check your website to make sure this did not cause any compatibility issues.

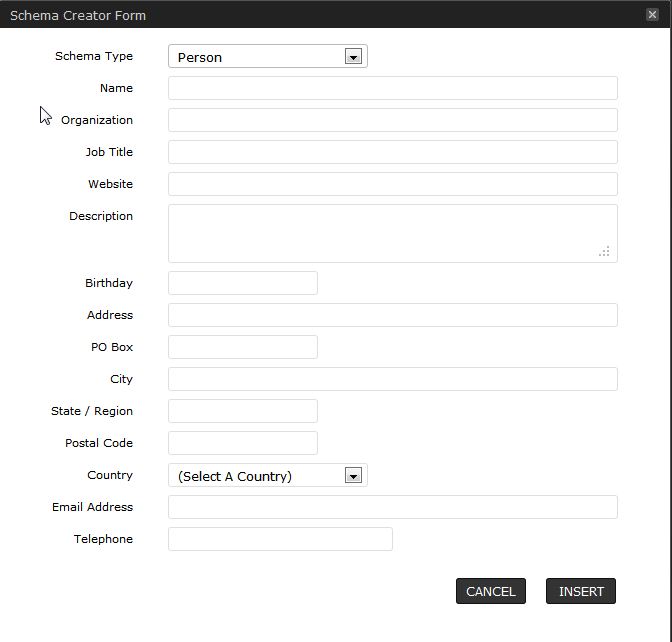

Now that your website is HTML5 compatible, you will need to download and install a plugin called Schema Creator by Raven. Once activated, the plugin will automatically add schema.org microdata throughout your website.

Setting up microdata is as simple as that. If you would like to add in more microdata, go to your post editor or page editor, and you will notice a small icon with the letters “SC” in it at the top of the editor. This tool will allow you to add in microdata for people, products, events, organizations, movies, books, and reviews. It will create a short code and place it within the page for you.
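If you have not seen microdata before, it is simply a few extra attributes on ordinary HTML. The Python sketch below generates a schema.org Person fragment to show what that markup looks like. It is an illustration only, and the plugin’s actual output may be structured differently; the function name and the sample values are hypothetical, while `itemscope`, `itemtype`, `itemprop`, and the `name`, `jobTitle`, and `url` properties come from the microdata and schema.org vocabularies.

```python
from html import escape


def person_microdata(name, job_title, url):
    """Return an HTML fragment marking up a person with schema.org microdata."""
    return (
        '<div itemscope itemtype="http://schema.org/Person">'
        f'<span itemprop="name">{escape(name)}</span>, '
        f'<span itemprop="jobTitle">{escape(job_title)}</span> '
        f'(<a itemprop="url" href="{escape(url)}">website</a>)'
        "</div>"
    )


# Hypothetical author details.
snippet = person_microdata("Jane Doe", "Travel Writer", "http://blog.example/about/")
print(snippet)
```

You can paste markup like this into a structured data testing tool to see how a search engine reads it.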

A Few More Quick Tips

Now that your website conforms to today’s Google algorithm standards, there are a few other simple maintenance activities to keep in mind:

Always update your version of WordPress and all plugins as soon as updates are available. If you fail to keep up with updating plugins and versions, you may run into compatibility issues later down the road that will break your website.

Also, make an effort to publish new content at least once per month. Google loves fresh content, and favors websites that are updated frequently. If you get lazy about posting regularly, your traffic and rankings will suffer.

One final piece of advice is to monitor your analytics and webmaster tool accounts at least once per month. This will help you monitor technical issues that may arise as well as traffic drops. If you notice any abnormalities or receive warning messages in webmaster tools, act on these issues in a timely fashion.

Home Owners Reluctant to Sell; Inventories Fall | Katonah NY Realtor

Inventory levels of for-sale homes at the end of 2012 were down 17.3 percent from year-ago levels, reaching the lowest level in more than five years, Realtor.com reports. In some areas, inventories have dropped 68 percent over the year.

“It’s been a buyers’ market for a while. Sellers have been reluctant to put their homes on the market,” says Steve Berkowitz, chief executive of Move Inc., which operates Realtor.com. As housing numbers roll out for January and February in the coming weeks, these will be notable to watch because they’ll provide early clues about buyer traffic and sellers’ expectations, Berkowitz says.

For-sale inventories dropped the most year-over-year in December 2012 in the following metros:

- Sacramento, Calif.: -68%

- Stockton-Lodi, Calif.: -65%

- Oakland, Calif.: -64%

- San Jose, Calif.: -52%

- Seattle-Bellevue-Everett, Wash.: -45%

- San Francisco: -43%

- Ventura, Calif.: -43%

- Riverside-San Bernardino, Calif.: -41%

- Los Angeles-Long Beach, Calif.: -40%

- Orange County, Calif.: -39%

Source: Realtor.com and “Housing Inventory Ends Year Down 17%,” The Wall Street Journal (Jan. 16, 2013)

Tips for Gen X and Gen Y Home Buyers | Katonah Realtor

According to a recent survey, people who belong to the Generation X and Generation Y demographics haven’t been deterred by the housing market downturn at all.

A Better Homes and Gardens Real Estate survey found that 75 percent of Gen X and Y respondents believe owning a home is a key indicator of success; 69 percent said the recent housing downturn made them more knowledgeable about homeownership than their parents were at their age.

And it turns out that Gen X-ers and Y-ers are more motivated than some older generations give them credit for. The survey revealed that Gen X-ers and Gen Y-ers are willing to take second jobs (40 percent said they would) or move in with their parents (23 percent) in order to buy into the American Dream of owning a home.

The real estate market during the past five years was certainly scary, especially for younger and less experienced home buyers. And so, a lot of people in Gen X and Gen Y sat on the sidelines. But the market has definitely bounced back, and many believe that now is a great time to buy. You just have to be savvy about it.

Here are five tips to help Gen X-ers and Gen Y-ers buy into the American Dream.

Have a five-year plan

Unlike in the boom years, you can’t assume a home purchased today will appreciate in value within five years. If you’re unsure about your five-year plans, it’s better to rent.

Use technology creatively

It’s well-documented that Gen X-ers and Gen Y-ers start their home search online. Real estate listings sites, mortgage calculators and valuation tools such as Zillow’s Zestimate® home value are typically places a buyer starts. But, once you’re in the market, there are tons of online resources. Less obvious tools, such as Google Street View, can help, too. It once helped a client realize that the home she wanted to buy in San Francisco’s Hayes Valley neighborhood may not be as safe as she thought. Google Street View revealed that there were previously bars on the windows of the ground-floor apartment.

Beware of information overload

Using the Internet and apps, home buyers today have an unprecedented amount of data available. Sometimes, however, it’s too much and can cause the buyer to shoot themselves in the foot. For example, a buyer might learn that the seller stands to make a 10 percent profit in a short amount of time. Even though the profit is in line with current market values, that information might cause the buyer to make a low offer and kick themselves a month later for missing out on a great house.

Don’t assume you don’t need a real estate agent

Because so much information is online, many Gen X-ers and Gen Y-ers might think they can buy a home on their own. However, the role of the agent is no longer about finding the listings. It’s about presenting the offer and getting it accepted, getting through inspections and getting the deal done. A real estate transaction can go 50 different ways now. A good agent will steer a buyer on the right path. A savvy agent will know the ins and outs of any local market better than an uninformed buyer with a full-time job and family. It’s their business to be in the know, and it’s what they do all day long. Experienced agents will have a strong network in the local market that can give you the added edge. Good agents like to work with other good agents. Finally, keep in mind that a listing agent might not even consider working with an unrepresented buyer.

Look for opportunities to increase the home’s value

Baby boomers and preceding generations could more or less count on staying in their homes for many years and, in turn, on their homes’ steady increase in value over time. After the market downturn, however, that’s not the case. Because they’re so mobile, Gen X-ers and Gen Y-ers in particular should steer clear of buying the best home on the best block. Instead, look for ways to add value. Look at homes that don’t show well, are marketed poorly or are outdated. Don’t be afraid of doing light remodeling or making smart improvements that will add value. If you have to sell your home sooner than you’d planned, you’re covered.

Shanghai Housing Market Posts Huge Sales Spike | Katonah Realtor

Despite New Health Law, Some See Sharp Rise in Premiums | Katonah NY Real Estate

Particularly vulnerable to the high rates are small businesses and people who do not have employer-provided insurance and must buy it on their own.

In California, Aetna is proposing rate increases of as much as 22 percent, Anthem Blue Cross 26 percent and Blue Shield of California 20 percent for some of those policy holders, according to the insurers’ filings with the state for 2013. These rate requests are all the more striking after a 39 percent rise sought by Anthem Blue Cross in 2010 helped give impetus to the law, known as the Affordable Care Act, which was passed the same year and will not be fully in effect until 2014.

In other states, like Florida and Ohio, insurers have been able to raise rates by at least 20 percent for some policy holders. The rate increases can amount to several hundred dollars a month.

The proposed increases compare with about 4 percent for families with employer-based policies.

Under the health care law, regulators are now required to review any request for a rate increase of 10 percent or more; the requests are posted on a federal Web site, healthcare.gov, along with regulators’ evaluations.

The review process not only reveals the sharp disparity in the rates themselves, it also demonstrates the striking difference between places like New York, one of the 37 states where legislatures have given regulators some authority to deny or roll back rates deemed excessive, and California, which is among the states that do not have that ability.

New York, for example, recently used its sweeping powers to hold rate increases for 2013 in the individual and small group markets to under 10 percent. California can review rate requests for technical errors but cannot deny rate increases.

The double-digit requests in some states are being made despite evidence that overall health care costs appear to have slowed in recent years, increasing in the single digits annually as many people put off treatment because of the weak economy. PricewaterhouseCoopers estimates that costs may increase just 7.5 percent next year, well below the rate increases being sought by some insurers. But the companies counter that medical costs for some policy holders are rising much faster than the average, suggesting they are in a sicker population. Federal regulators contend that premiums would be higher still without the law, which also sets limits on profits and administrative costs and provides for rebates if insurers exceed those limits.

Critics, like Dave Jones, the California insurance commissioner and one of two health plan regulators in that state, said that without a federal provision giving all regulators the ability to deny excessive rate increases, some insurance companies can raise rates as much as they did before the law was enacted.

“This is business as usual,” Mr. Jones said. “It’s a huge loophole in the Affordable Care Act,” he said.

While Mr. Jones has not yet weighed in on the insurers’ most recent requests, he is pushing for a state law that will give him that authority. Without legislative action, the state can only question the basis for the high rates, sometimes resulting in the insurer withdrawing or modifying the proposed rate increase.

The California insurers say they have no choice but to raise premiums if their underlying medical costs have increased. “We need these rates to even come reasonably close to covering the expenses of this population,” said Tom Epstein, a spokesman for Blue Shield of California. The insurer is requesting a range of increases, which average about 12 percent for 2013.

Although rates paid by employers are more closely tracked than rates for individuals and small businesses, policy experts say the law has probably kept at least some rates lower than they otherwise would have been.

“There’s no question that review of rates makes a difference, that it results in lower rates paid by consumers and small businesses,” said Larry Levitt, an executive at the Kaiser Family Foundation, which estimated in an October report that rate review was responsible for lowering premiums for one out of every five filings.

Federal officials say the law has resulted in significant savings. “The health care law includes new tools to hold insurers accountable for premium hikes and give rebates to consumers,” said Brian Cook, a spokesman for Medicare, which is helping to oversee the insurance reforms.

“Insurers have already paid $1.1 billion in rebates, and rate review programs have helped save consumers an additional $1 billion in lower premiums,” he said. If insurers collect premiums and do not spend at least 80 cents out of every dollar on care for their customers, the law requires them to refund the excess.

Federal officials, citing a report issued last September, say the review process reduced rates by nearly three percentage points on average.

In New York, for example, state regulators recently approved increases that were much lower than insurers initially requested for 2013, taking into account the insurers’ medical costs, how much money went to administrative expenses and profit and how exactly the companies were allocating costs among offerings. “This is critical to holding down health care costs and holding insurance companies accountable,” Gov. Andrew M. Cuomo said.

While insurers in New York, on average, requested a 9.5 percent increase for individual policies, they were granted an increase of just 4.5 percent, according to the latest state averages, which have not yet been made public. In the small group market, insurers asked for an increase of 15.8 percent but received approvals averaging only 9.6 percent.

But many people elsewhere have experienced significant jumps in the premiums they pay. According to the federal analysis, 36 percent of the requests to raise rates by 10 percent or more were found to be reasonable. Insurers withdrew 12 percent of those requests, 26 percent were modified and another 26 percent were found to be unreasonable.

And, in some cases, consumer advocates say insurers have gone ahead and charged what regulators described as unreasonable rates because the state had no ability to deny the increases.

Two insurers cited by federal officials last year for raising rates excessively in nine states appear to have proceeded with their plans, said Carmen Balber, the Washington director for Consumer Watchdog, an advocacy group. While the publicity surrounding the rate requests may have drawn more attention to what the insurers were doing, regulators “weren’t getting any results by doing that,” she said.

Some consumer advocates and policy experts say the insurers may be increasing rates for fear of charging too little, and may be less afraid of having to refund some of the money than of losing money.

Many insurance regulators say the high rates are caused by rising health care costs. In Iowa, for example, Wellmark Blue Cross Blue Shield, a nonprofit insurer, has requested a 12 to 13 percent increase for some customers. Susan E. Voss, the state’s insurance commissioner, said there might not be any reason for regulators to deny the increase as unjustified. Last year, after looking at actuarial reviews, Ms. Voss approved a 9 percent increase requested by the same insurer.

“There’s a four-letter word called math,” Ms. Voss said, referring to the underlying medical costs that help determine what an insurer should charge in premiums. Health costs are rising, especially in Iowa, she said, where hospital mergers allow the larger systems to use their size to negotiate higher prices. “It’s justified.”

Some consumer advocates say the continued double-digit increases are a sign that the insurance industry needs to operate under new rules. Often, rates soar because insurers are operating plans that are closed to new customers, creating a pool of people with expensive medical conditions that becomes increasingly costly to insure.

While employers may be able to raise deductibles or co-payments as a way of reducing the cost of premiums, the insurer typically does not have that flexibility. And because insurers now take into account someone’s health, age and sex in deciding how much to charge, and whether to offer coverage at all, people with existing medical conditions are frequently unable to shop for better policies.

In many of these cases, the costs are increasing significantly, and the rates therefore cannot be determined to be unreasonable. “When you’re allowed medical underwriting and to close blocks of business, rate review will not affect this,” said Lynn Quincy, senior health policy analyst for Consumers Union.

The practice of medical underwriting — being able to consider the health of a prospective policy holder before deciding whether to offer coverage and what rate to charge — will no longer be permitted after 2014 under the health care law.